Containers: Technology Landscape

This post looks at the history of containerization and shows the technology landscape that makes it possible.

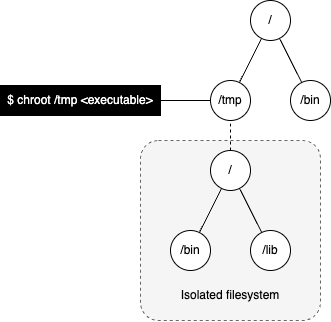

Filesystem Isolation

In 1979, during the development of Version 7 Unix, the chroot(2) system call was introduced - this provided filesystem isolation by changing the root directory of the calling process and its child processes. In other words, once we change the root filesystem for a process it is no longer able to access any files outside of the new "root".
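
The effect can be pictured with a toy model of path lookup. The sketch below is not how the kernel implements chroot; it assumes a hypothetical helper `resolve_in_chroot` and a hypothetical jail directory `/srv/jail`, and it ignores symlinks, mount points and open file descriptors. It only shows that, once the root has changed, `..` can no longer climb above the new root.

```python
import posixpath


def resolve_in_chroot(new_root: str, path: str, cwd: str = "/") -> str:
    """Map a path as seen by a chrooted process onto the host filesystem.

    Toy model only: symlinks, mount points and open file descriptors,
    all of which matter for a real chroot, are ignored.
    """
    # Relative paths are interpreted from the working directory, which
    # itself lives inside the new root.
    inside = posixpath.normpath(posixpath.join("/", cwd, path))
    # normpath drops any ".." that would climb above "/", which mirrors
    # how ".." behaves at the root directory of a process.
    relative = inside.lstrip("/")
    return posixpath.normpath(posixpath.join(new_root, relative))


if __name__ == "__main__":
    jail = "/srv/jail"  # hypothetical directory used as the new root
    for p in ("/etc/passwd", "../../etc/passwd", "/../../bin/sh"):
        print(p, "->", resolve_in_chroot(jail, p))
```

Every path in the usage example ends up under `/srv/jail`, however many `..` components it contains.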

Nowadays, pivot_root(2) is preferred over chroot since a chroot can easily be undone through the double chroot technique as described in CVE-2004-2073.

By the early 2000s, the idea behind chroot had been extended to virtualize access to the filesystem, users, and the networking subsystem. FreeBSD 4.0 built on chroot to introduce jails. Around the same time, Linux-VServer was created with isolation as one of its main features. Sun Microsystems introduced Solaris Containers, now known as Oracle Solaris Zones.

This gives us the first building block that is necessary to run a container - filesystem isolation.

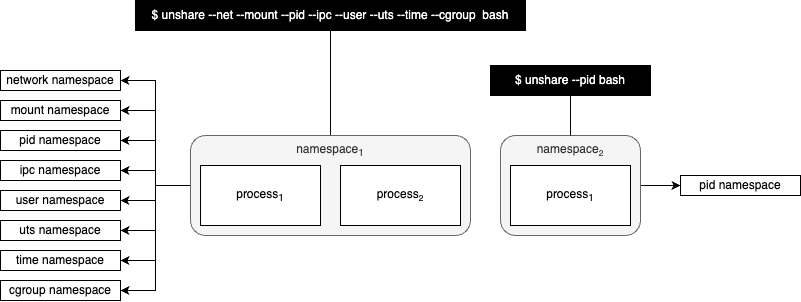

Process Isolation

In 2002, namespaces were introduced in Linux kernel 2.4.19, inspired by Plan 9 from Bell Labs. A namespace presents a global resource to a set of processes as if they had their own isolated instance of it; a PID namespace, for example, gives the processes inside it their own set of process IDs.

Figure 2 below shows that namespace1 gets its own process id namespace and namespace2 gets its own process id namespace. In other words, namespace1 will start numbering its processes from 1 upwards and namespace2 will also number its processes starting from 1 upwards.

There are several different namespace types, viz., cgroup, IPC, network, mount, PID, time, user and UTS. A namespace is created with the unshare(1) command.
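
One way to see which namespaces a process belongs to is to read the symlinks under `/proc/<pid>/ns`. The sketch below assumes a Linux system with `/proc` mounted; the helper name `current_namespaces` is made up for illustration, and it returns an empty dictionary anywhere the directory is unavailable.

```python
import os


def current_namespaces(pid: str = "self") -> dict[str, str]:
    """Return {namespace type: identifier} for a process, or {} if unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    result: dict[str, str] = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return result  # not Linux, no such process, or /proc is not mounted
    for name in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable in this environment
    return result


if __name__ == "__main__":
    for ns_type, ident in current_namespaces().items():
        print(f"{ns_type:20} {ident}")
```

Two processes that print the same identifier for a given type share that namespace; a process started under unshare(1) would show a different identifier for each namespace it unshared.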

This gives us the second building block that is necessary to run a container - process isolation.

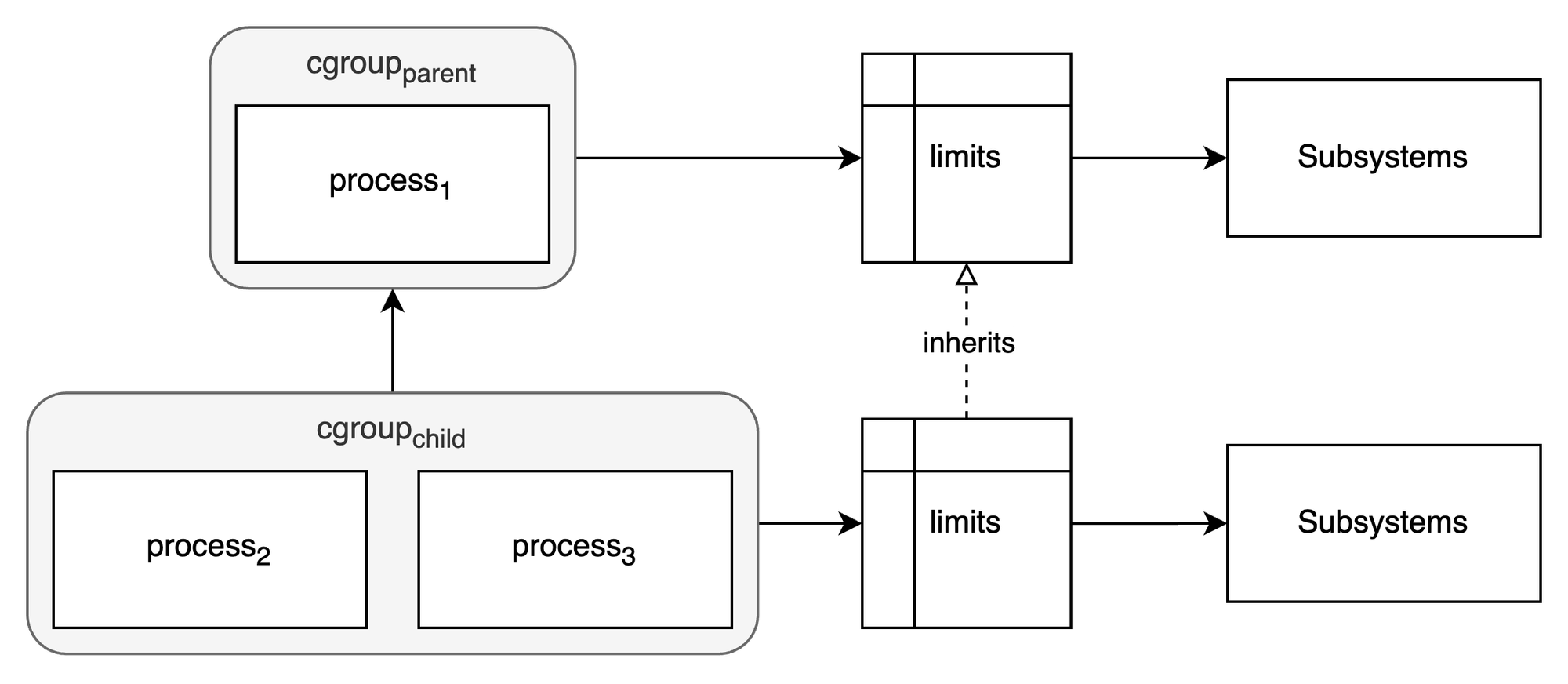

Resource Control

In 2006, Google created process containers, which were later renamed Control Groups, or cgroups, and merged into the Linux kernel in 2007.

Control groups organize processes into groups whose resource usage can be monitored and limited. There are two versions, cgroups v1 and cgroups v2, where v2 provides a unified hierarchy and a simplified API. Figure 3 below shows the limits of a child cgroup being inherited from the parent cgroup for a specific set of subsystems.
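
To make the v1/v2 distinction concrete, here is a small sketch that parses the `hierarchy-ID:controller-list:cgroup-path` lines found in `/proc/<pid>/cgroup`. The names `CgroupEntry` and `parse_proc_cgroup` are illustrative, and the sample text in the usage section is invented rather than captured from a real machine.

```python
from typing import NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: tuple[str, ...]
    path: str

    @property
    def is_v2(self) -> bool:
        # The unified (v2) hierarchy is reported with ID 0 and no controllers.
        return self.hierarchy_id == 0 and not self.controllers


def parse_proc_cgroup(text: str) -> list[CgroupEntry]:
    """Parse the contents of /proc/<pid>/cgroup into structured entries."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split at most twice: the cgroup path may itself contain ":".
        hierarchy_id, controllers, path = line.split(":", 2)
        names = tuple(c for c in controllers.split(",") if c)
        entries.append(CgroupEntry(int(hierarchy_id), names, path))
    return entries


if __name__ == "__main__":
    # Invented sample mixing v1 hierarchies with the v2 unified hierarchy.
    sample = (
        "4:cpu,cpuacct:/user.slice\n"
        "2:memory:/user.slice\n"
        "0::/user.slice/session-1.scope\n"
    )
    for entry in parse_proc_cgroup(sample):
        print("v2" if entry.is_v2 else "v1", entry.controllers, entry.path)
```

On a host that uses only cgroups v2, the file would be expected to contain just the single `0::` line, which is the unified hierarchy mentioned above.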

This gives us the third building block that is necessary to run containers - resource control.

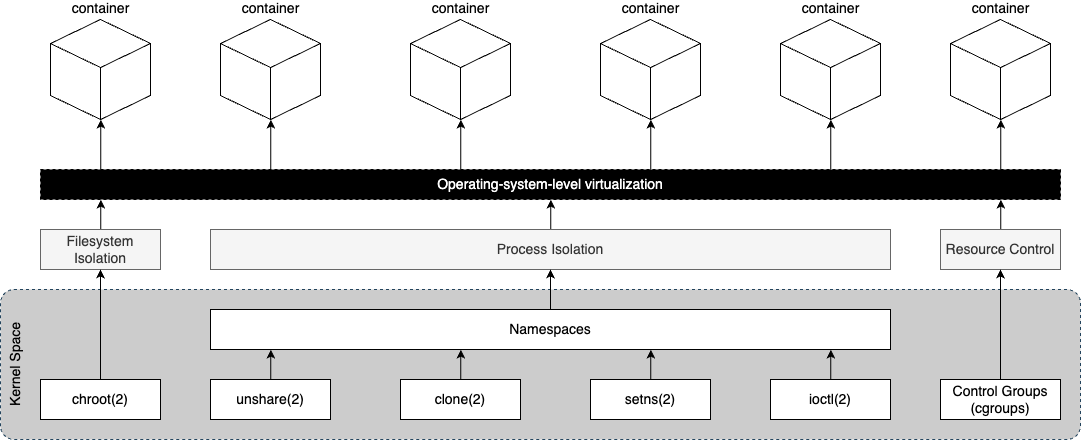

Operating-System-Level Virtualization

Through the composition of the above three building blocks we now have operating-system-level virtualization.

Figure 4 below shows the system calls in the kernel that enable filesystem isolation and process isolation via namespaces. It also shows that cgroups are part of kernel space, providing resource control.

Containers are, essentially, isolated user space instances. Even though it is entirely possible to craft and run containers by hand using chroot(1), unshare(1), cgcreate(1), cgset(1), cgget(1) and cgclassify(1), it is not convenient - we need tools.
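
To give a feel for what "by hand" means, the sketch below only builds and prints a command line that combines unshare(1) and chroot(1); it does not execute anything. The helper `container_command` and the `/srv/rootfs` path are assumptions for illustration, the cgroup steps are left out, and actually running the printed command would normally require root and a populated root filesystem.

```python
import shlex


def container_command(rootfs: str, argv: list[str]) -> list[str]:
    """Build (but do not run) an unshare + chroot command line."""
    return [
        "unshare",
        "--fork",   # fork so the child runs as PID 1 in the new PID namespace
        "--pid",    # new PID namespace
        "--mount",  # new mount namespace
        "--uts",    # new UTS (hostname) namespace
        "--ipc",    # new IPC namespace
        "--net",    # new network namespace
        "chroot", rootfs,  # filesystem isolation inside the new namespaces
        *argv,
    ]


if __name__ == "__main__":
    # "/srv/rootfs" is a hypothetical directory holding an unpacked root filesystem.
    print(shlex.join(container_command("/srv/rootfs", ["/bin/sh"])))
```

Even this reduced version leaves out resource limits, networking setup and cleanup, which is the gap that container tooling fills.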

Open Container Initiative (OCI)

In 2015, the Linux Foundation started the Open Container Initiative (OCI), which maintains three open specifications for container technology: a runtime specification, an image specification and a distribution specification. The OCI also maintains runc, a CLI tool for spawning and running containers according to the OCI specification, originally donated to the OCI by Docker.
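
As a rough illustration of what the runtime specification describes, the sketch below assembles a heavily reduced `config.json` for a runtime bundle. It is an assumption-laden outline, not a complete or validated configuration: the helper `minimal_runtime_config`, the version string, the hostname and the chosen namespaces are illustrative, and a real bundle (for example one generated by `runc spec`) contains many more fields.

```python
import json


def minimal_runtime_config(args: list[str], rootfs: str = "rootfs") -> dict:
    """Return a reduced, illustrative OCI runtime configuration."""
    return {
        "ociVersion": "1.0.2",  # assumed version string for this sketch
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": args,
            "env": ["PATH=/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Filesystem isolation: the directory that becomes the container's root.
        "root": {"path": rootfs, "readonly": True},
        "hostname": "demo",  # hypothetical hostname
        # Process isolation: the namespaces the runtime should create.
        "linux": {
            "namespaces": [
                {"type": "pid"},
                {"type": "mount"},
                {"type": "ipc"},
                {"type": "uts"},
                {"type": "network"},
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_runtime_config(["/bin/sh"]), indent=2))
```

The three building blocks from the earlier sections show up directly in this document as `root`, `linux.namespaces` and (omitted here) the resource settings.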

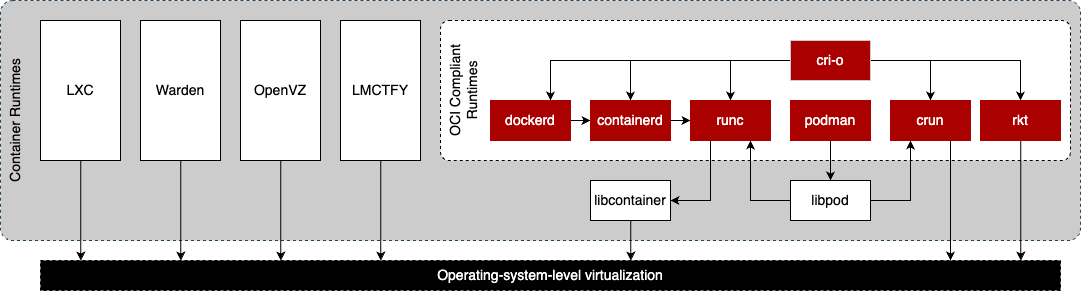

Container Runtime

A container runtime is responsible for running containers and managing the container lifecycle.

Rewind to around 2005 when SWsoft (now known as Virtuozzo) released OpenVZ which enabled the creation of multiple isolated containers known as Virtual Private Servers (VPS).

In 2008, Linux Containers (LXC) developed an API and a set of command line utilities to run and manage Linux containers without the need for a separate kernel.

Cloud Foundry started Warden in 2011 as an API for managing isolated environments, or containers. Interestingly, early versions of Warden used LXC, which was later replaced with a bespoke implementation. The difference between LXC and Warden is that LXC was tied to Linux whereas Warden can be implemented on any operating system that supports isolation.

In 2013, Google started Let Me Contain That For You (LMCTFY) - its open-source container stack. It aimed to provide a container abstraction through a high-level API built around user intent. In 2015, development stopped on LMCTFY and Google started contributing to libcontainer which is now part of the Open Container Initiative (OCI).

Around the same time, in 2013, Docker popularized containers with an ecosystem to run and manage containers and to build and retrieve images (pre-built containers). Docker, in its early days, also used LXC but later replaced it with libcontainer.

In 2014, CoreOS created rkt, pronounced "rocket", as an alternative to Docker. Unlike Docker, rkt used the App Container specification (appc). Active development on the App Container Specification stopped in 2016.

CRI-O was created around 2016, specifically for Kubernetes, as a lightweight, OCI-compliant implementation of the Kubernetes Container Runtime Interface (CRI).

In 2017, Docker, Inc. separated the Docker runtime out of Docker into a new project called containerd and donated it to the Cloud Native Computing Foundation (CNCF). Nowadays, Docker depends on containerd to manage the container environment and its lifecycle.

Around the middle of 2018 Podman was released for the first time as an alternative to Docker.

Other Container Runtimes

There are several other container runtimes such as Kata Containers, Windows Containers, RunV and systemd-nspawn. These container runtimes were not mentioned as they have a different execution mechanism and typically rely on hypervisors, except for systemd-nspawn.

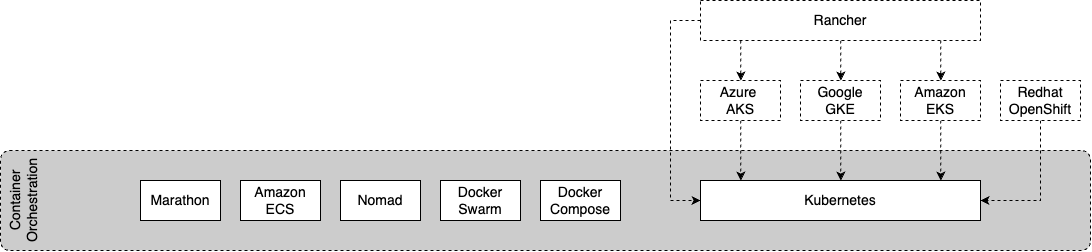

Container Orchestration

Container orchestration automates the management and coordination of containers at scale.

Marathon is a container orchestration platform that runs on Apache Mesos and was started around 2013.

In 2014, Docker released Docker Compose and Docker Swarm to run multi-container applications. The difference between the two is that Docker Compose manages multi-container applications on a single host, whereas Docker Swarm orchestrates them across several hosts. In other words, Docker Compose is better suited to development and testing, and Docker Swarm to production workloads. Docker Swarm has since evolved into Swarm Mode, which is part of the Docker Engine; Docker Swarm "Classic" is no longer actively developed.

Around the same time, in 2014, Google released Kubernetes to orchestrate multi-container applications across hosts. Kubernetes was later donated to the Cloud Native Computing Foundation (CNCF) in 2015.

Towards the end of 2014, Amazon Web Services (AWS) launched Amazon Elastic Container Service (ECS), which integrates with other AWS services.

In 2015, HashiCorp Nomad, which can manage containerized and non-containerized workloads, was open sourced.

Many of the major cloud providers today offer Kubernetes-as-a-Service, or managed Kubernetes services, for example Amazon EKS, Google GKE and Azure AKS.

Rancher, a centralized platform for managing multiple Kubernetes clusters, was open sourced in 2015.

Container Image

Around 2013, Docker created container images as part of its ecosystem and revolutionized the way in which applications are packaged.

A container image is an unchangeable, static file that includes executable code so that it can run an isolated process. Container images contain all the necessary system libraries, system tools and other settings a software program needs to run on a containerization platform.

In 2015, Docker donated its container image format to the Open Container Initiative (OCI). The image specification is available on GitHub.

Container Distribution

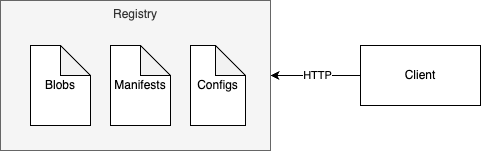

The OCI Container Distribution Specification defines a protocol for the distribution of container images that is based on the Docker Registry HTTP API V2 protocol.

Figure 7 below shows that a container registry consists of blobs, manifests and configs, which are accessed via HTTP.
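
As a sketch of how a client addresses these objects, the helpers below build the manifest and blob URLs used by the registry HTTP API and compute the content digest that identifies a blob. The function names, the registry host `registry.example.com` and the repository name are hypothetical; nothing is sent over the network.

```python
import hashlib


def blob_digest(data: bytes) -> str:
    """Content-addressable identifier of a blob, in 'sha256:<hex>' form."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def manifest_url(registry: str, name: str, reference: str) -> str:
    """URL of a manifest; the reference is a tag or a digest."""
    return f"{registry}/v2/{name}/manifests/{reference}"


def blob_url(registry: str, name: str, digest: str) -> str:
    """URL of a blob (a layer or a config), addressed by its digest."""
    return f"{registry}/v2/{name}/blobs/{digest}"


if __name__ == "__main__":
    registry = "https://registry.example.com"  # hypothetical registry
    repo = "library/demo"                      # hypothetical repository name
    layer = b"example layer bytes"             # stand-in for a real layer archive
    print(manifest_url(registry, repo, "latest"))
    print(blob_url(registry, repo, blob_digest(layer)))
```

A client would typically fetch the manifest first, read the digests of the config and layers from it, and then fetch each of those as a blob.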

In 2016, Docker donated the distribution specification to the Open Container Initiative (OCI).

Summary

This post looked at the history of containerization to show the relationships between all the technologies that make containerization possible.

Feel free to download the technology landscape diagram below: